Lumi: Accelerating Pre-Sales with GenAI, Data Integration, and State Management

Lumi tackles the inefficiencies of early-stage customer engagement by combining large language models with integrations into our internal data, automating much of the pre-sales process. It guides prospects through the essential stages (identifying their needs, explaining relevant past projects and capabilities, and scheduling a meeting with a sales representative), which shortens the sales cycle and improves lead quality. Under the hood, it relies on stateful workflows, internal APIs, and modern language models to deliver accurate, context-aware interactions that set the stage for successful customer relationships.

This post dives into the technical architecture, data integrations, and state management logic behind our GenAI agent—demonstrating how it delivers a more efficient, automated pre-sales pipeline while ensuring data security and compliance.

Technical Architecture of the GenAI Pre-Sales Bot

The core of our pre-sales agent is a modular, flexible architecture that integrates Large Language Models (LLMs) through LangChain. We currently use OpenAI’s GPT-4o for its strong reasoning and coherence, but the LangChain-based setup lets us swap in alternative LLMs as performance, cost, or feature requirements evolve. This includes models deployed in-house, so that data never leaves your environment.

Language Understanding and Reasoning

At present, GPT-4o handles natural language understanding and response generation. By wrapping the model in LangChain components, we maintain a clean abstraction layer—ensuring that switching to other LLMs (from open-source options to enterprise-grade specialized models) is straightforward. This approach secures our investment against rapid changes in the LLM market and ensures long-term adaptability.
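To illustrate why the abstraction layer matters, here is a minimal sketch in plain Python. LangChain chat models share a common `invoke` method through its Runnable interface; the sketch mimics that idea with a `typing.Protocol` so it runs without LangChain installed. `ChatModel`, `HostedModel`, and `LocalModel` are hypothetical names, and both models are canned stand-ins rather than real clients.

```python
from typing import Protocol

Message = dict[str, str]


class ChatModel(Protocol):
    """The only interface the agent relies on (hypothetical, modeled on `invoke`)."""

    def invoke(self, messages: list[Message]) -> str: ...


class HostedModel:
    """Canned stand-in for a hosted model such as GPT-4o."""

    def invoke(self, messages: list[Message]) -> str:
        return f"hosted: {messages[-1]['content']}"


class LocalModel:
    """Canned stand-in for a self-hosted model that keeps data in-house."""

    def invoke(self, messages: list[Message]) -> str:
        return f"local: {messages[-1]['content']}"


def answer(model: ChatModel, question: str) -> str:
    # The agent builds messages and delegates; it never references a vendor SDK.
    messages = [
        {"role": "system", "content": "You are a pre-sales assistant."},
        {"role": "user", "content": question},
    ]
    return model.invoke(messages)


print(answer(HostedModel(), "Do you build data platforms?"))
print(answer(LocalModel(), "Do you build data platforms?"))
```

Because `answer` depends only on the protocol, moving from a hosted model to an in-house deployment changes the object passed in, not the agent logic.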

Data Access Layer via Internal APIs

A key part of the agent's intelligence comes from its ability to retrieve relevant data on demand. Our system calls internal APIs to retrieve project histories, customer references, and consultant resumes. By feeding this information into the model’s context through LangChain, the agent blends general language capabilities with up-to-date, domain-specific knowledge.
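A minimal sketch of how retrieved records might be injected into the model's context, assuming a hypothetical `Project` record and a stubbed `fetch_projects` function in place of the real internal API (the post does not describe the endpoints or their schema):

```python
from dataclasses import dataclass


@dataclass
class Project:
    # Hypothetical shape of a record returned by an internal projects API.
    name: str
    industry: str
    summary: str


def fetch_projects(industry: str) -> list[Project]:
    """Stub for an internal API call; a real version would issue an HTTP request."""
    catalog = [
        Project("Claims Automation", "insurance", "Document AI for claims intake."),
        Project("Sales Forecasting", "retail", "Weekly demand forecasts for store planning."),
    ]
    return [p for p in catalog if p.industry == industry]


def build_context_message(projects: list[Project]) -> dict[str, str]:
    """Turn retrieved records into a system message the LLM can cite."""
    if not projects:
        body = "No matching projects were found. Do not invent examples."
    else:
        lines = [f"- {p.name} ({p.industry}): {p.summary}" for p in projects]
        body = "Relevant past projects:\n" + "\n".join(lines)
    return {"role": "system", "content": body}


messages = [
    {"role": "system", "content": "You are a pre-sales assistant."},
    build_context_message(fetch_projects("insurance")),
    {"role": "user", "content": "Have you worked with insurers?"},
]
print(messages[1]["content"])
```

The explicit "no matches" message is a design choice that discourages the model from filling the gap with invented case studies.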

State and Conversation Management

A lightweight state machine, implemented using LangGraph, tracks the conversation’s phase. Whether the user is in the Needs Discovery, Company/Project Info, or Meeting Scheduling phase, the agent's prompts and actions adjust accordingly. This keeps the model’s responses aligned with the conversation flow and business logic.
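The post implements this state machine with LangGraph. Independently of that library, the core idea can be sketched in plain Python: an enum of phases and a table of allowed transitions that guards any proposed phase change (for example, one suggested by the LLM). The transition table below is an assumption for illustration, not Lumi's actual rules.

```python
from enum import Enum


class Phase(Enum):
    NEEDS_DISCOVERY = "needs_discovery"
    COMPANY_INFO = "company_project_info"
    MEETING_SCHEDULING = "meeting_scheduling"


# Hypothetical transition table: which phases may follow each phase.
ALLOWED = {
    Phase.NEEDS_DISCOVERY: {Phase.COMPANY_INFO, Phase.MEETING_SCHEDULING},
    Phase.COMPANY_INFO: {Phase.NEEDS_DISCOVERY, Phase.MEETING_SCHEDULING},
    Phase.MEETING_SCHEDULING: set(),  # terminal in this sketch
}


def transition(current: Phase, proposed: Phase) -> Phase:
    """Return the next phase, ignoring proposals the table does not allow."""
    if proposed == current or proposed in ALLOWED[current]:
        return proposed
    return current


print(transition(Phase.NEEDS_DISCOVERY, Phase.COMPANY_INFO))
print(transition(Phase.MEETING_SCHEDULING, Phase.NEEDS_DISCOVERY))
```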

Scalable, Modular Deployment

Containerized deployment on our cloud infrastructure, combined with flexible orchestration tools, ensures that scaling the agent is as simple as adding more instances. Built-in caching, load balancing, and observability components help maintain performance under varying traffic loads. The LangGraph integration also keeps our tech stack modular, allowing the introduction of new APIs, data sources, or models without rewriting large amounts of code.
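The post does not name its caching layer. As an illustration only, a small time-to-live cache in front of internal API calls could look like this; `TTLCache` and `fetch_consultants` are hypothetical, and the injectable `clock` makes expiry easy to test:

```python
import time
from typing import Callable


class TTLCache:
    """Tiny time-based cache for internal API responses (illustrative only)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, object]] = {}

    def get_or_fetch(self, key: str, fetch: Callable[[], object]) -> object:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]  # still fresh: skip the API call
        value = fetch()
        self._store[key] = (now, value)
        return value


calls = []


def fetch_consultants() -> list[str]:
    calls.append(1)  # count how often the "API" is actually hit
    return ["Ada", "Linus"]


cache = TTLCache(ttl_seconds=300)
cache.get_or_fetch("consultants", fetch_consultants)
cache.get_or_fetch("consultants", fetch_consultants)
print(len(calls))  # 1
```

An in-process cache like this is per instance; when the agent runs as several containers, a shared cache service would be needed for instances to reuse each other's results.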

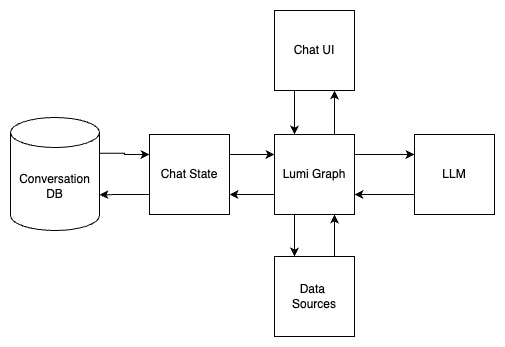

High Level Architecture

The following diagram is a high level architecture of the system:

- Chat UI: A React-based web application built on Next.js. It acts as the interface for users to interact with the assistant, presenting outputs from the Lumi Graph and capturing user inputs.

- Lumi Graph: The central logic of the assistant. It determines the appropriate LLM prompts, selects tools, and manages the conversation phases. Lumi Graph interacts with other components to ensure accurate and context-aware responses while updating the Chat State as needed.

- LLM (Large Language Model): The LLM interprets prompts from Lumi Graph, combines them with the chat history, and generates relevant responses. Additionally, it summarizes conversations and identifies the current conversation phase for seamless interaction.

- Data Sources: These are external or internal sources of real-time information. Lumi Graph fetches relevant data from these sources at the request of the LLM, enhancing responses with up-to-date context.

- Chat State: This component keeps track of the conversation history and manages the conversation’s state, ensuring consistency across interactions.

- Conversation DB: A persistence layer for the Chat State, allowing users to continue their conversations at any time by retrieving the saved state.
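LangGraph provides checkpointers for persisting graph state between runs. The post does not say which store backs the Conversation DB, so the sketch below uses the standard library's `sqlite3` with a hypothetical `chat_state` table keyed by conversation ID to show the save-and-resume cycle:

```python
import json
import sqlite3
from typing import Optional


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS chat_state ("
        " conversation_id TEXT PRIMARY KEY,"
        " state_json TEXT NOT NULL)"
    )
    return conn


def save_state(conn: sqlite3.Connection, conversation_id: str, state: dict) -> None:
    # INSERT OR REPLACE keeps only the latest snapshot per conversation.
    conn.execute(
        "INSERT OR REPLACE INTO chat_state (conversation_id, state_json) VALUES (?, ?)",
        (conversation_id, json.dumps(state)),
    )
    conn.commit()


def load_state(conn: sqlite3.Connection, conversation_id: str) -> Optional[dict]:
    row = conn.execute(
        "SELECT state_json FROM chat_state WHERE conversation_id = ?",
        (conversation_id,),
    ).fetchone()
    return json.loads(row[0]) if row else None


conn = open_db()
save_state(conn, "conv-42", {
    "phase": "needs_discovery",
    "messages": [{"role": "user", "content": "Hi"}],
})
print(load_state(conn, "conv-42")["phase"])  # needs_discovery
```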

Phase Detection and Workflow Management

The agent's ability to identify and adapt to different phases of the pre-sales conversation is critical to providing relevant, timely responses. By structuring the interaction into distinct phases—such as discovering the prospect’s needs, sharing company and project information, and ultimately scheduling a meeting—we ensure a logical progression and a more streamlined user experience.

Defining Phases and Triggers

Each conversation phase (Needs Discovery, Company/Project Info, and Meeting Scheduling) is defined with clear entry and exit conditions. For example, when the user asks about previous projects or technologies, the bot moves from Needs Discovery to Company/Project Info and uses tools to describe related past projects. Similarly, once the prospect signals readiness, the conversation shifts toward Meeting Scheduling.
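In Lumi, the determineState node asks the LLM to infer the phase. The entry and exit conditions can still be written down explicitly, for instance as a deterministic fallback or as test cases for the classifier. A sketch with hypothetical boolean signals and string phase names:

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ConversationFacts:
    # Hypothetical signals extracted from the conversation so far.
    needs_described: bool = False
    asked_about_projects: bool = False
    ready_to_meet: bool = False


# Ordered rules (from_phase, exit condition, to_phase); the first match wins.
Rule = tuple[str, Callable[[ConversationFacts], bool], str]
RULES: list[Rule] = [
    ("needs_discovery", lambda f: f.asked_about_projects, "company_project_info"),
    ("needs_discovery", lambda f: f.needs_described and f.ready_to_meet, "meeting_scheduling"),
    ("company_project_info", lambda f: f.ready_to_meet, "meeting_scheduling"),
]


def next_phase(current: str, facts: ConversationFacts) -> str:
    for source, condition, target in RULES:
        if source == current and condition(facts):
            return target
    return current  # no exit condition met: stay in the current phase


print(next_phase("needs_discovery", ConversationFacts(asked_about_projects=True)))
```

Note that a prospect who is ready to meet but has not yet described their needs stays in Needs Discovery under these rules.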

State Machine Backed by LangGraph

Using LangGraph’s state management features, we maintain a structured representation of the conversation as it unfolds. LangGraph helps us store relevant data, track user intent, and seamlessly guide the flow from one phase to the next. This ensures that the Large Language Model is always provided with the appropriate context, improving the coherence and precision of responses.
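LangGraph lets each key of the graph state declare a reducer (through `Annotated` type hints), so updates to a message list are appended while other keys are simply overwritten. The plain-Python sketch below reproduces that merge behavior with an explicit reducer table; the state keys are hypothetical:

```python
import operator
from typing import Any, Callable

# Hypothetical reducers: "messages" accumulates; keys without a reducer are overwritten.
REDUCERS: dict[str, Callable[[Any, Any], Any]] = {"messages": operator.add}


def apply_update(state: dict[str, Any], update: dict[str, Any]) -> dict[str, Any]:
    """Merge a node's partial update into the conversation state."""
    merged = dict(state)
    for key, value in update.items():
        reducer = REDUCERS.get(key)
        if reducer is not None and key in merged:
            merged[key] = reducer(merged[key], value)  # e.g. list concatenation
        else:
            merged[key] = value  # default: last write wins
    return merged


state = {"phase": "needs_discovery", "messages": [{"role": "user", "content": "Hi"}]}
state = apply_update(state, {"messages": [{"role": "assistant", "content": "Hello!"}]})
state = apply_update(state, {"phase": "company_project_info"})
print(state["phase"], len(state["messages"]))  # company_project_info 2
```

Nodes can therefore return only the keys they change, and the history is never accidentally truncated by a node that writes a single new message.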

Context-Aware Prompts and Logic

Depending on the conversation state, the agent's prompts and logic differ. During the Needs Discovery phase, it focuses on open-ended questions to uncover use cases. In the Company/Project Info phase, it draws from internal APIs to present relevant case studies and team expertise. As the conversation moves into Meeting Scheduling, the agent moves to summarize the conversation and gather availability via a more traditional user form. At each step, contextually tailored prompting ensures consistent, actionable responses.
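One way to express this phase-dependent behavior is a table that maps each phase to a system prompt and the tools the model may call. The prompts and tool names below are hypothetical placeholders, not Lumi's actual prompts:

```python
from dataclasses import dataclass

Message = dict[str, str]


@dataclass(frozen=True)
class PhaseConfig:
    system_prompt: str
    tools: tuple[str, ...]  # names of tools the model may call in this phase


PHASE_CONFIG = {
    "needs_discovery": PhaseConfig(
        "Ask open-ended questions to understand the prospect's use case.", ()
    ),
    "company_project_info": PhaseConfig(
        "Answer with concrete case studies and team expertise from tool results.",
        ("search_projects", "search_consultants"),
    ),
    "meeting_scheduling": PhaseConfig(
        "Summarize the conversation and invite the user to fill in the meeting form.", ()
    ),
}


def prompt_for(phase: str, history: list[Message]) -> tuple[list[Message], tuple[str, ...]]:
    """Prepend the phase's system prompt and return the tools allowed in that phase."""
    config = PHASE_CONFIG[phase]
    return [{"role": "system", "content": config.system_prompt}, *history], config.tools


messages, tools = prompt_for(
    "company_project_info", [{"role": "user", "content": "Any fintech work?"}]
)
print(tools)  # ('search_projects', 'search_consultants')
```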

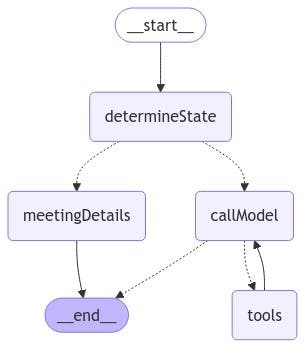

The following is a LangGraph representation of what happens every time a message is sent from the user to the agent. The following actions are performed before composing a response:

- determineState: This node leverages the LLM to analyze the last few messages in the conversation history. It identifies the current state of the conversation, guiding the system to select the appropriate prompts and tools for the next steps.

- meetingDetails: Once the conversation achieves its intended goal, the LLM extracts key information from the dialogue (e.g., name, project summary, email address, company details). This data is then used to generate a form, allowing the user to request a meeting with the sales team.

- callModel & tools: Based on the state identified by determineState, the LLM is invoked with the corresponding prompt and a list of tools. If necessary, the LLM directs specific tools to be executed, retrieves their results, and updates the response accordingly.
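The three steps above can be approximated in plain Python to show the control flow of a single turn. This is a sketch, not LangGraph code: the scripted `fake_llm`, the tool-call format (a JSON object with `tool` and `args`), and the routing rule that sends the Meeting Scheduling phase to meetingDetails are all assumptions made for illustration.

```python
import json
from typing import Any, Callable

LLM = Callable[[str], str]
PHASES = {"needs_discovery", "company_project_info", "meeting_scheduling"}

# Hypothetical tool registry; real tools would call internal APIs.
TOOLS: dict[str, Callable[..., Any]] = {
    "search_projects": lambda topic: [f"Case study about {topic}"],
}


def determine_state(llm: LLM, history: list[str]) -> str:
    """determineState: ask the LLM to label the phase from recent messages."""
    label = llm("PHASE? " + " | ".join(history[-4:])).strip()
    return label if label in PHASES else "needs_discovery"  # fallback on bad output


def call_model(llm: LLM, phase: str, history: list[str], max_tool_calls: int = 3) -> str:
    """callModel & tools: run requested tools until the LLM returns plain text."""
    scratch = [f"[{phase}]", *history]
    for _ in range(max_tool_calls):
        reply = llm("ANSWER " + " | ".join(scratch))
        try:
            request = json.loads(reply)
        except json.JSONDecodeError:
            request = None
        if not isinstance(request, dict):
            return reply  # not a tool call: this is the final answer
        result = TOOLS[request["tool"]](**request["args"])
        scratch.append(f"tool {request['tool']} -> {result}")
    return "Sorry, I could not complete that request."


def meeting_details(llm: LLM, history: list[str]) -> dict[str, str]:
    """meetingDetails: extract form fields as JSON from the whole conversation."""
    return json.loads(llm("EXTRACT " + " | ".join(history)))


def run_turn(llm: LLM, history: list[str]) -> dict[str, Any]:
    phase = determine_state(llm, history)
    if phase == "meeting_scheduling":
        return {"phase": phase, "form": meeting_details(llm, history)}
    return {"phase": phase, "reply": call_model(llm, phase, history)}


def fake_llm(prompt: str) -> str:
    # Scripted stand-in for the real model, for illustration only.
    if prompt.startswith("PHASE?"):
        return "meeting_scheduling" if "book" in prompt else "company_project_info"
    if prompt.startswith("EXTRACT"):
        return json.dumps({"name": "Dana", "email": "dana@example.com"})
    if "tool search_projects" in prompt:
        return "We did a similar project: Case study about retail."
    return json.dumps({"tool": "search_projects", "args": {"topic": "retail"}})


print(run_turn(fake_llm, ["Have you done retail work?"]))
print(run_turn(fake_llm, ["I'd like to book a call"]))
```

The `max_tool_calls` cap is a defensive choice so a model that keeps requesting tools cannot loop forever.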

APIs and Tools Integration

A key strength of our pre-sales GenAI agent lies in its ability to seamlessly integrate with various internal services—ranging from project databases to consultant directories. Through these integrations, the agent dynamically enriches its responses with precise, real-time information.

Internal Data APIs

Dedicated endpoints supply the agent with current project portfolios, customer references, and consultant profiles. By retrieving this data on demand, the bot can confidently answer a prospect’s questions, highlighting domain expertise or similar success stories relevant to their needs.
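LangChain's `@tool` decorator builds a tool description from a function's name, signature, and docstring. The stdlib sketch below imitates that idea for two hypothetical internal-API wrappers, `search_projects` and `get_consultant_profile`, so the model can be told which tools exist and what arguments they take:

```python
import inspect
from typing import Any, Callable

REGISTRY: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register an internal-API wrapper so it can be offered to the LLM."""
    REGISTRY[fn.__name__] = fn
    return fn


def tool_specs() -> list[dict[str, Any]]:
    """Describe each tool (name, docstring, parameter types) for the model's tool list."""
    specs = []
    for name, fn in REGISTRY.items():
        params = {
            p.name: getattr(p.annotation, "__name__", str(p.annotation))
            for p in inspect.signature(fn).parameters.values()
        }
        specs.append({"name": name, "description": inspect.getdoc(fn), "parameters": params})
    return specs


@tool
def search_projects(technology: str, limit: int = 3) -> list[str]:
    """Find past projects that used the given technology."""
    # Hypothetical stand-in for a call to an internal projects endpoint.
    return [f"{technology} project {i + 1}" for i in range(limit)]


@tool
def get_consultant_profile(consultant_id: str) -> dict[str, str]:
    """Fetch a consultant's profile and resume summary."""
    # Hypothetical stand-in for a call to an internal consultant directory.
    return {"id": consultant_id, "summary": "Placeholder resume summary."}


print(tool_specs()[0])
```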

LangGraph-Orchestrated Workflows

LangGraph provides a robust framework for orchestrating these data retrieval steps. As the user’s journey progresses through the conversation phases, LangGraph helps trigger the appropriate API calls at the right moments. For example, during the Company/Project Info phase, the system automatically fetches and inserts relevant case study summaries into the model’s context. Later, when the conversation moves to Meeting Scheduling, LangGraph orchestrates the creation of a conversation summary and presents an availability form to the user.

Contextual Integration with the LLM

After obtaining the necessary data from internal APIs, the agent merges the information into the LLM’s prompt context. This enables the model to reference recent project successes or propose a suitable consultant without the user having to browse a static resource. The synergy of real-time data access with advanced language modeling ensures highly specific, contextually aware responses.

User Interface and Interaction Enhancements

While the underlying architecture ensures accurate data retrieval and context-aware responses, the final user experience is also shaped by how information is presented. Moving beyond basic markdown text responses, we’ve introduced more interactive UI components to make the conversation not only informative, but also more intuitive and visually appealing.

Card-Based Data Presentation

When a prospect asks about a specific project or a consultant’s expertise, the agent dynamically displays a card containing key details, such as project summaries and consultant bios, in a clean, readable layout. By placing relevant data front and center, these cards help prospects quickly assess whether our offerings align with their needs, improving clarity and reducing cognitive load.
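The post does not specify the message format between backend and frontend. One common approach, sketched here with hypothetical field names, is to return a reply as typed parts so the Next.js UI can render `markdown` parts as text and `project_card` parts as card components:

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ProjectCard:
    # Hypothetical card fields; the UI would map these to a React component.
    title: str
    summary: str
    technologies: list[str]


def card_message(text: str, cards: list[ProjectCard]) -> str:
    """Serialize a reply that mixes markdown text with structured cards."""
    payload = {
        "parts": [{"type": "markdown", "content": text}]
        + [{"type": "project_card", **asdict(card)} for card in cards]
    }
    return json.dumps(payload)


msg = card_message(
    "Here is a relevant project:",
    [ProjectCard("Claims Automation", "Document AI for claims intake.", ["Python", "OCR"])],
)
print(json.loads(msg)["parts"][1]["type"])  # project_card
```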

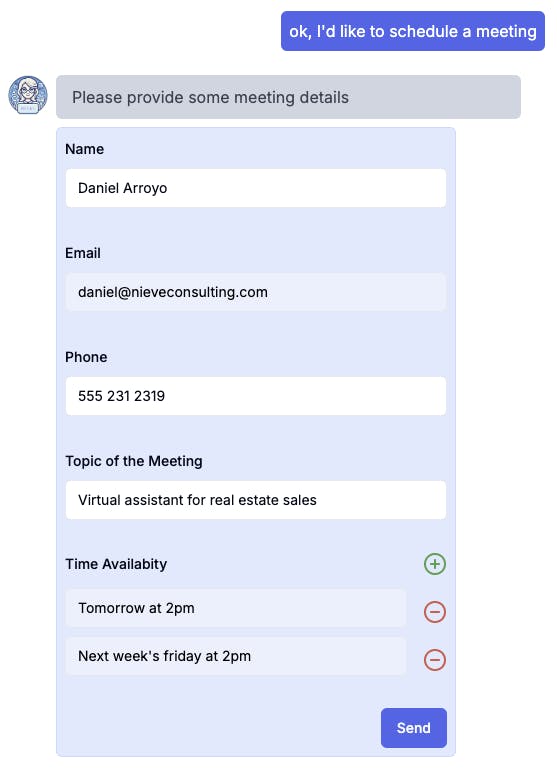

Interactive Scheduling Forms

As the conversation transitions into the Meeting Scheduling phase, the user is presented with an interactive form that includes pre-filled fields drawn from earlier interactions. For example, details like the user’s company name, project summary, or preferred project timeline are automatically populated. This reduces friction and makes it easier for the prospect to finalize a meeting time without repeating information they’ve already provided.
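A sketch of pre-filling the scheduling form from fields the meetingDetails step extracted, assuming a hypothetical `MeetingForm` schema. Unknown keys are dropped and empty fields are reported, so the UI can highlight what the user still needs to enter:

```python
from dataclasses import dataclass, fields


@dataclass
class MeetingForm:
    # Hypothetical form fields; empty strings are left for the user to fill in.
    name: str = ""
    email: str = ""
    company: str = ""
    project_summary: str = ""
    timeline: str = ""
    availability: str = ""


def prefill_form(extracted: dict[str, str]) -> MeetingForm:
    """Copy known, non-empty fields extracted by the LLM; ignore unexpected keys."""
    known = {f.name for f in fields(MeetingForm)}
    return MeetingForm(**{k: v for k, v in extracted.items() if k in known and v})


def missing_fields(form: MeetingForm) -> list[str]:
    return [f.name for f in fields(form) if not getattr(form, f.name)]


form = prefill_form({
    "name": "Dana",
    "company": "Acme",
    "project_summary": "Migrate reporting to the cloud",
    "budget": "n/a",  # not a form field, so it is dropped
})
print(missing_fields(form))  # ['email', 'timeline', 'availability']
```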

Seamless Integration With Backend Workflows

These UI elements, such as cards and forms, are rendered in sync with LangGraph’s conversation state machine and the LLM’s responses. As soon as the data retrieval APIs return relevant information, the agent's frontend layer updates the interface. Likewise, once the user completes a scheduling form, the same logic and APIs process the request and email our team the relevant details, closing the loop between back-end intelligence and front-end usability.
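A minimal sketch of composing that notification with Python's standard `email.message.EmailMessage`. The addresses and body layout are placeholders, and actual delivery (for example via `smtplib.SMTP.send_message` against your mail relay) is left out:

```python
from email.message import EmailMessage


def build_sales_email(form: dict[str, str], summary: str) -> EmailMessage:
    """Compose the notification sent to the sales team (addresses are placeholders)."""
    msg = EmailMessage()
    msg["Subject"] = f"Meeting request: {form.get('company', 'unknown company')}"
    msg["From"] = "lumi@example.com"
    msg["To"] = "sales@example.com"
    msg["Reply-To"] = form["email"]  # lets the sales rep answer the prospect directly
    details = "\n".join(f"{key}: {value}" for key, value in sorted(form.items()))
    msg.set_content(f"Conversation summary:\n{summary}\n\nForm details:\n{details}")
    return msg


notification = build_sales_email(
    {"name": "Dana", "email": "dana@example.com", "company": "Acme", "availability": "Tue 10:00"},
    "Prospect wants to modernize reporting.",
)
print(notification["Subject"])  # Meeting request: Acme
```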

Conclusion and Future Outlook

By integrating a Large Language Model with stateful workflows, internal data APIs, and a rich user interface, our GenAI-powered pre-sales agent demonstrates how intelligent conversation agents can transform the sales pipeline. Yet the potential extends far beyond this initial application.

The same architecture and workflow principles can be applied to other stages of the customer lifecycle, from onboarding to post-implementation support. Different data sources—such as engineering knowledge bases, partner integrations, or even product usage analytics—can be brought in to enrich the conversation and guide users toward more informed decisions. Additional phases or subflows can be introduced, expanding the agent's utility across diverse contexts, like vendor comparisons, solution design brainstorming, or technical troubleshooting sessions.

This agent platform can be easily reconfigured or scaled for new domains. The modular nature of the architecture, the flexible workflow management via LangGraph, and the clean separation of UI components ensure that enhancements do not require starting from scratch. Instead, we can continually adapt, refine, and extend the system—creating a foundation that not only accelerates pre-sales but also supports the full spectrum of interactions between our company and its customers.

Chief Technical Officer